HowTo: Create an evolutionary search algorithm

Evolutionary Search Algorithms

Evolutionary Map Pathways uses an evolutionary search algorithm to create pathways through a space. But, what is an evolutionary search algorithm?

An evolutionary search algorithm is a stochastic search algorithm loosely inspired by Darwinian evolution through natural selection.

But, what IS an evolutionary search algorithm?

An evolutionary search algorithm is a way to search through many variations of created content, while keeping the best scored content and removing the lowest scoring content. These types of searches can be used to search through any content space. Here, the search is applied to creating content that is a pathway through the mountains.

Core Idea

An evolutionary search algorithm should be able to search through many instances of content (e.g., a pathway on a map). To do so, the search algorithm needs to first generate a number of instances (e.g., 100) of the content. The first created content is the first generation. The first generated instances can be pure random or curated based on designer input, user input, generation rules, etc.

After generating the first instances, the search algorithm needs to determine a score, also called a fitness value, for each instance. The score can be determined suitable manner based on the content itself. For example, the score can be based on the number of treasure chests on a map, the distance from a starting point to and ending point, etc.

The instances of content are then sorted based on their scores. The highest scoring content can the the best content of this generation of content. The worst half of the instances of content are replaced with copies of the best half of the instances of content. However, it does not need to be halved. For example, the worst 3/4 of the content can be removed, then the best 1/4 of the content can be copied three times, to reach the original number of instances.

Now that the worst content is removed from this generation, the copied instances (e.g., offspring) can be mutated in some way, so that they slightly differ from the best content. This is done to see if any of the mutated content ends up, later, to be better than the current best content (e.g., in the next generation). The copied instances can be mutated randomly or mutated based on probability distributions. For example, a mountain element in a map can be randomly mutated to be an open space, a starting spot, and ending spot, a treasure, or a house.

After replicating the best content and slightly mutating it, the evolutionary search algorithm can continue to process the next generation of content, by scoring, sorting, removing the worst, replicating the best, and mutating the copied content.

The process repeats until a set number of generations have been processed or if a score threshold is reached (e.g., if a instance of content is good enough to end the process).

Implementation

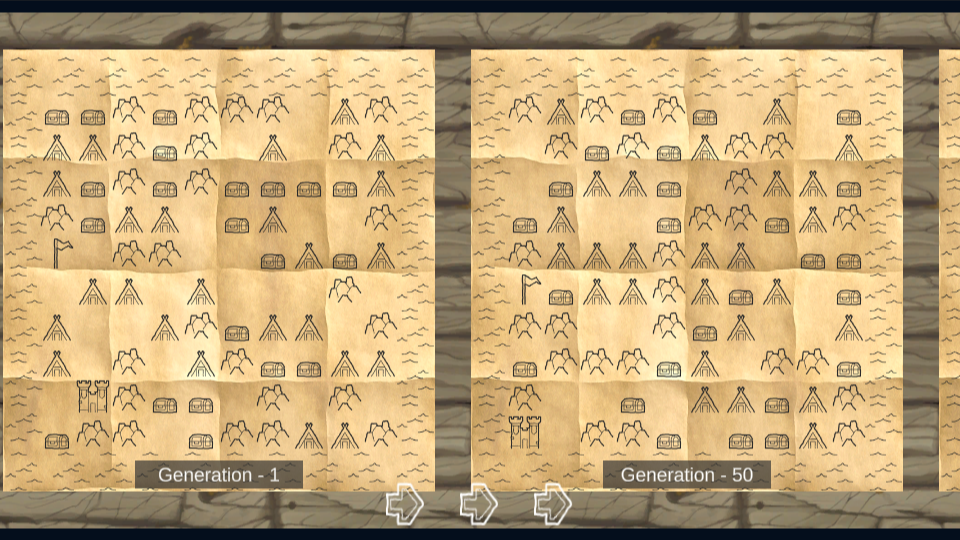

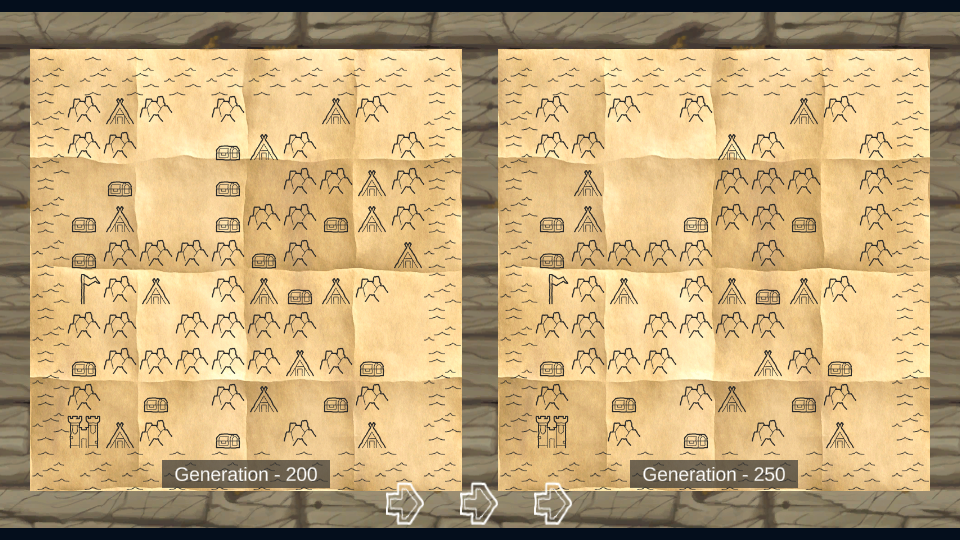

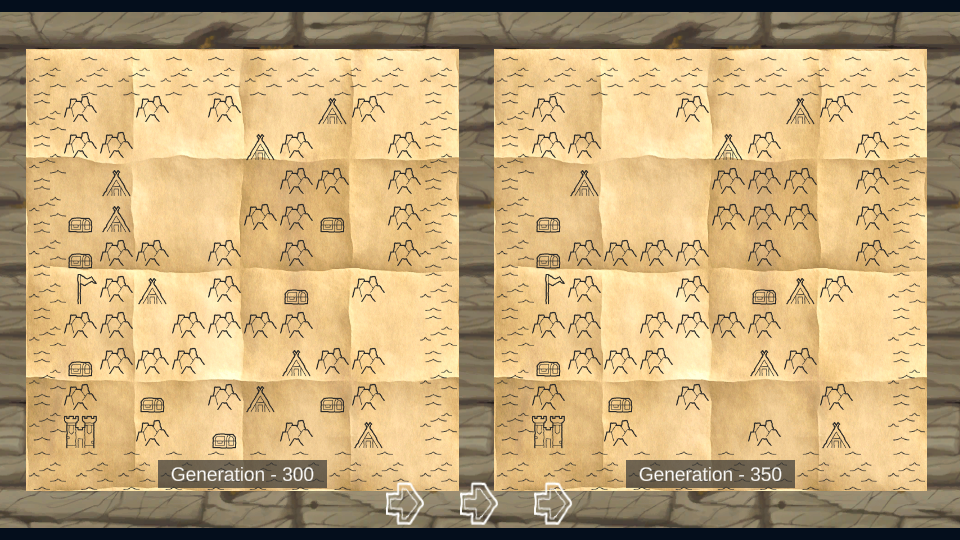

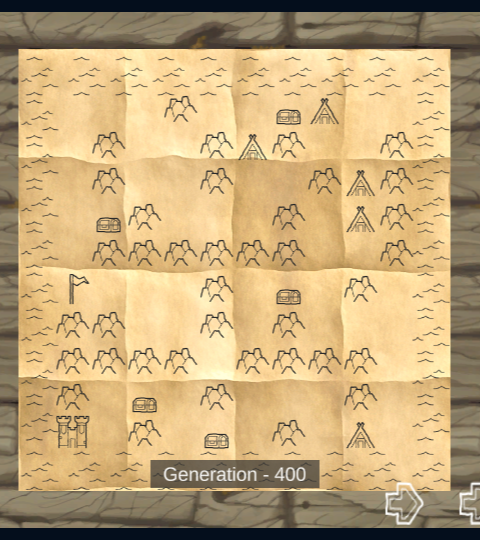

The actual evolutionary search algorithm in Evolutionary Map Pathways is not that different from the core idea above. Each core step is broken down into its components in the subsections below. An example of the changes made to the map over many generations is shown below.

Initialize the Content Population

To initialize the first generation of maps, 400 random maps are created. Each map includes a 10 by 10 integer array where the value of each element corresponds to a drawn element at that point on the map. The drawn elements include an open space, a start position, an exit position, a treasure, an enemy, and a wall. The first generation's integer array is created random except for the placement of only one start position and one exit position. This is not required, but allows the initial generation to start with at least one desirable attribute.

Score the Content

Each map of each generation is scored based on desirable properties. For the maps, the score is based on the number of enemies, the number of treasures, the number of starts, the number of exits, and the distance between the start and the exit.

In particular, the score for a map is decreased based on the difference between the number of treasures and the number of enemies. This promotes an equal number of enemy locations and treasure locations over time in each generation, since having the same number of treasures and enemies results in a higher score.

The score is further decrease if there are more than five treasures. This helps to declutter the map and not have treasure locations everywhere. Also, since the number of treasures is tied to the number of enemies, the number of enemies also tends to five enemy locations.

The score is heavily changed to promote having only one start position and one exit. The score is increased by a large chunk if there is only one start position and decreased if there are more than one start positions. Similar score changes are made for the exit locations.

Finally, the score is changed based on whether or not a "walkable" path is present between the start and exit locations, if there are only one of each. A walkable tile in the integer array that represents the map can include all element types, except for the walls (which are displayed as mountains). An A* pathfinding algorithm determines the Manhattan distance between the start and exit positions. If they connect, then the score is given a large bonus, in order to promote connectivity between start and exit. Further, the score is increased by twice the length of the path, in order to promote long paths over short paths.

The actual implementation used to evaluate the score is included in the code below (written in C#). The Individual class is an instance of the map. The A* algorithm is determined within the StartExitDistance() function.

private static int EvaluationFunction(Individual individual)

{

// Start Score at 0

int score = 0;

// Score is higher if number of treasures and monsters is the same

score -= Mathf.Abs(individual.EnemyCount - individual.TreasureCount);

// Score is lower if there are more than five treasures

if (individual.TreasureCount > 5)

{ score -= individual.TreasureCount; }

// Score is lower if there is more than one start or no starts

if (individual.StartCount == 1) { score += 40; }

else { score -= 40; }

// Score is lower if there is more than one exit or no exits

if (individual.ExitCount == 1) { score += 40; }

else { score -= 40; }

// Score is higher if the distance between the start and the exit is longer

// Run A Star protocol

if (individual.ExitCount == 1 && individual.StartCount == 1)

{

int distanceScore = StartExitDistance(individual);

// Add an extra score for connecting the start and end else remove score

if (distanceScore > 3) { score += 100; }

else { score -= 40; }

score += distanceScore * 2;

}

return score;

}

Sort the Content

After determining the score for every individual map in the generation, the maps and corresponding scores can be sorted. The highest scoring maps are placed first, while the worst map is last. There are many different ways to sort the content. I used premade sort functions in C#.

public static (Individual[], int[]) SortIndividuals(Individual[] population, int[] scores)

{

// Sort the scores and population in ascending order of the scores

Array.Sort(scores, population);

// Reverse the scores to be in descending order

Array.Reverse(scores);

Array.Reverse(population);

return (population, scores);

}

Replace the Worst Content

Once the maps are sorted based on their score, the worst half of the maps can be replaced with copies of the best half of the maps. This can be implemented simply by deleting the worst maps and duplicating the best maps. I iterated through the list of maps as follows:

public static Individual[] CreateOffspring(Individual[] population, int mu, int lambda)

{

Individual[] clonePopulation = (Individual[])population.Clone();

for (int i = 0; i < mu; i++)

{

// Remove the worst half of individuals

// Replace the worst half with copies of the best half

// Using a copy constructor

clonePopulation[i + mu] = new Individual(clonePopulation[i]);

}

for (int j = mu; j < mu + lambda; j++)

{

// Mutate the copies

clonePopulation[j].Mutate(clonePopulation[j]);

}

return clonePopulation;

}

Mutate the Offspring

After copying the best maps, the copies can be mutated. This is where an "evolutionary" process comes in. The copied maps can be considered to be offspring of the best maps. The new offspring can be slightly different than their parent map.

To mutate the offspring, a number of elements of the map's integer array are randomly changed to a different value (e.g., a wall is changed to an open space). By changing only 1 element, small changes in a good map can be explored. However, sometimes only changing 1 element in all of the offspring can result in all attempts to create a better map being the same or oscillating between different bad maps (e.g., gradient decay). In order to help avoid this problem, each offspring has a chance to either mutate only 1 element or mutate 5 elements. By changing an offspring by 5 elements at a time, larger differences in good maps can be explored, scored, and compared. In particular, there is a 30% chance that an individual map mutates 5 elements rather than 1 element.

After mutating the offspring, the above scoring, sorting, and replacement steps can occur for the next generation of maps. The process can repeat until the score of one of the maps is high enough (e.g., greater than a predefined threshold) or a set number of generations have been evaluated.

public void Mutate(Individual indiv)

{

int numberToMutate;

if (Random.value < 0.7f)

{

numberToMutate = 1;

}

else

{

numberToMutate = 5;

}

List<Vector2> elementsToMutate = GenerateRandomLocationList(numberToMutate);

for (int i = 0; i < numberToMutate; i++)

{

ElementType oldType = (ElementType)indiv.ElementGrid[(int)elementsToMutate[i].x,

(int)elementsToMutate[i].y];

ElementType newType = Utility.GetRandomEnum<ElementType>();

while (oldType == newType)

{

newType = Utility.GetRandomEnum<ElementType>();

}

indiv.ElementGrid[(int)elementsToMutate[i].x, (int)elementsToMutate[i].y] = (int)newType;

}

}

Epilogue

I hope my explanation of evolutionary search algorithms and my implementation of one is clear. These types of algorithms have many uses. Even the one provided here can be tweaked for different use cases. Additionally, this implementation is separated from the visuals used to represent the created content. For example, the created maps can easily be graphically changed to generally represent mazes, dungeons, race tracks, etc.

Feel free to reach out if you have any questions or comments!

Evolutionary Map Pathways

Evolutionary Search Algorithm Creates Path Through Map

| Status | In development |

| Category | Tool |

| Author | Gogodinosaur |

| Tags | 2D, algorithm, evolutionary, Generator, map, Procedural Generation, search, unit |

Leave a comment

Log in with itch.io to leave a comment.